Speed up xz compression serial

Tar is one of the basic tools of Unix. It has been in use, basically unchanged, since 1979. It does one thing and it does it quite well. Basically, it is a stream format for describing files: it consists of consecutive pairs of file metadata and content. This is a simple and reliable format, but it has a big downside: individual entries cannot be accessed without processing the entire file from the beginning. Wikipedia has further details.

Compression makes this even worse. Accessing byte n requires unpacking every byte from the beginning of the file. This is unfortunate in itself, but it has an even bigger downside: compression and decompression are inherently serial operations.

Choosing relevant and representative data is a whole other issue.

Fairly recently I've done some benchmarks with xz and zstd for an embedded disk image on Ubuntu 18.04 (meaning zstd isn't the most recent version available, even though it's not horribly outdated). The winner was "still" xz, because it could compress more, slightly faster, and the bottleneck was the transfer of the compressed file. I know that Arch Linux has switched to zstd, but xz decompression (especially at high compression levels, since those make decompression faster, as I mentioned in another comment) is probably able to saturate storage I/O.

There's something that zstd has and xz doesn't, however: lots and lots of steam. The most interesting recent development in zstd is the ability to train it on one file and then compress another file. This makes it possible to generate binary diffs which are almost as good as bsdiff's but much more economical to generate and use.

Edit: one configuration of xz that is often very interesting is a low compression preset with a regular or even large dictionary: `--lzma2=preset=0,dict=64M` is often surprisingly fast and efficient. Xz's `-e` usually doesn't gain you much, however, and makes compression much, much slower.

Example on a roughly 20 GiB file of mixed compressibility, with most of the end being super compressible (lots of zeros). This is an actual data file that will get transferred a lot after the build, so saving any size at the expense of a bit of CPU time up front only makes sense to me. Who cares? The fun part here is that on this random bit of data I snagged, `-e` lands closer to `-6` than to `-9`, but normally `-e` wins out; for this specific instance I now kind of want to change our CI to run 6/9/e and pick the smallest of the three. `-e` is also faster than `-9` on this input data, so I'm not sure I buy your "much much slower" argument without some sort of examples.

And to be fair-ish, a comparison using pigz -9, because I can't be bothered with single-threaded compression of a 20 GiB file: `$ time pigz -9v -k input.data`

A few minutes more CPU time (less on actual non-laptop hardware) for a 600-900 MiB reduction seems well worth it to me. Your needs may vary, but every time I've looked at the "cost" in time of compressing with xz at higher modes, it has paid off in either network transfer or space savings later.
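To make the point about tar's stream format concrete, here is a minimal Python sketch that walks an uncompressed archive header by header. It assumes the plain ustar layout (512-byte header records, the name in bytes 0-99, an octal size in bytes 124-135, content padded to a multiple of 512 bytes) and deliberately ignores pax/GNU extension headers, the ustar name prefix and base-256 sizes; `list_tar_members` is a hypothetical helper, not part of any tool. The only way to reach the k-th entry is to skip over every header and payload before it.

```python
import io
import tarfile

BLOCK = 512  # tar is organised in 512-byte records


def list_tar_members(raw: bytes) -> list[tuple[str, int]]:
    """Walk a plain ustar archive and return (name, size) for each entry.

    There is no index: each header is found only by skipping the previous
    header and its padded content, so entry k costs a scan of entries 0..k-1.
    """
    members = []
    offset = 0
    while offset + BLOCK <= len(raw):
        header = raw[offset:offset + BLOCK]
        if header == b"\0" * BLOCK:  # an all-zero record marks the end
            break
        name = header[0:100].split(b"\0", 1)[0].decode("utf-8")
        # The size field is NUL/space-terminated octal text.
        size_field = header[124:136].split(b"\0", 1)[0].strip()
        size = int(size_field, 8) if size_field else 0
        members.append((name, size))
        # Skip this header plus the content rounded up to whole records.
        offset += BLOCK + (size + BLOCK - 1) // BLOCK * BLOCK
    return members
```

Python's own `tarfile` module does the same sequential walk internally when it lists or extracts members; it only becomes cheap to revisit entries after that first pass has recorded their offsets.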
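The same problem for compressed data can be sketched with Python's standard `lzma` module, which reads the .xz format: to get at byte n of a single .xz stream, the decompressor has to produce every byte before it. `byte_at` is a hypothetical helper for illustration; it decompresses in 64 KiB slices and throws away everything before n, so memory stays bounded but the time still grows with n.

```python
import lzma

CHUNK = 64 * 1024  # decompress in 64 KiB slices to keep memory bounded


def byte_at(compressed: bytes, n: int) -> int:
    """Return byte n of the data inside a single-stream .xz blob.

    Every byte before n must be decompressed (and is discarded here):
    an LZMA2 stream cannot be entered in the middle.
    """
    if n < 0:
        raise IndexError("negative offset")
    dec = lzma.LZMADecompressor()
    produced = 0  # decompressed bytes seen so far
    data = compressed
    while True:
        out = dec.decompress(data, max_length=CHUNK)
        data = b""  # leftover input is buffered inside the decompressor
        if n < produced + len(out):
            return out[n - produced]
        produced += len(out)
        if dec.eof or (not out and dec.needs_input):
            raise IndexError(f"offset {n} is past the end of the data")
```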
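Python's standard library has traditionally lacked zstd bindings, so the sketch below swaps in zlib's preset dictionary (`zdict`) to illustrate the idea of priming a compressor with a reference file before compressing a new version of it. It is an analogy, not zstd's training or its `--patch-from` option: deflate only looks back about 32 KiB, so only the tail of a larger reference can help. The helper names are hypothetical.

```python
import zlib


def compress_with_reference(new: bytes, reference: bytes) -> bytes:
    """Deflate `new` with `reference` preloaded as a preset dictionary.

    Only roughly the last 32 KiB of `reference` is usable, because that is
    deflate's maximum look-back distance.
    """
    comp = zlib.compressobj(level=9, zdict=reference)
    return comp.compress(new) + comp.flush()


def decompress_with_reference(blob: bytes, reference: bytes) -> bytes:
    # The decoder must be primed with exactly the same dictionary bytes.
    dec = zlib.decompressobj(zdict=reference)
    return dec.decompress(blob) + dec.flush()
```

When `new` is a lightly edited copy of `reference`, the output is mostly back-references into the dictionary, which is what makes this behave like a compact binary diff.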
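The `--lzma2=preset=0,dict=64M` suggestion maps onto a filter chain in Python's `lzma` module: the `preset` key supplies the fast level-0 defaults and `dict_size` overrides only the dictionary. This is a sketch of an equivalent setup, not xz's own code, and `xz_fast_bigdict` is a hypothetical name. Encoder memory grows with the dictionary size, and the decoder needs roughly the dictionary size as well.

```python
import lzma

MiB = 1024 * 1024


def xz_fast_bigdict(data: bytes, dict_size: int = 64 * MiB) -> bytes:
    """Rough Python analogue of `xz --lzma2=preset=0,dict=64M`.

    Preset 0 keeps the fast match finder and settings; the larger
    dictionary lets it match repeats that are far apart.
    """
    filters = [{"id": lzma.FILTER_LZMA2, "preset": 0, "dict_size": dict_size}]
    return lzma.compress(data, format=lzma.FORMAT_XZ, filters=filters)
```

A larger dictionary only pays off when repeated content is farther apart than preset 0's default 256 KiB window; otherwise the output is essentially the same as plain `-0`.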
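The "run 6/9/e and pick the smallest" CI idea is easy to express with the same module. This sketch assumes "e" means plain `xz -e`, which applies the extreme flag to the default preset 6 (that is, `-6e`); change the table if your CI uses `-9e` instead. Preset 9 uses a 64 MiB dictionary, so the `-9` candidate alone needs several hundred MiB of memory to compress. `smallest_xz` and `CANDIDATES` are hypothetical names.

```python
import lzma

# Hypothetical candidate table: 6 is xz's default preset, 9 the highest,
# and 6 | PRESET_EXTREME is what a bare `xz -e` selects.
CANDIDATES = {
    "-6": 6,
    "-9": 9,
    "-e": 6 | lzma.PRESET_EXTREME,
}


def smallest_xz(data: bytes, candidates: dict[str, int] = CANDIDATES) -> tuple[str, bytes]:
    """Compress with every candidate preset and keep the smallest output."""
    results = {label: lzma.compress(data, preset=p) for label, p in candidates.items()}
    best = min(results, key=lambda label: len(results[label]))
    return best, results[best]
```

The CI cost is the sum of all the runs, but the result is an ordinary .xz file, so consumers decompress it the same way whichever preset won.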

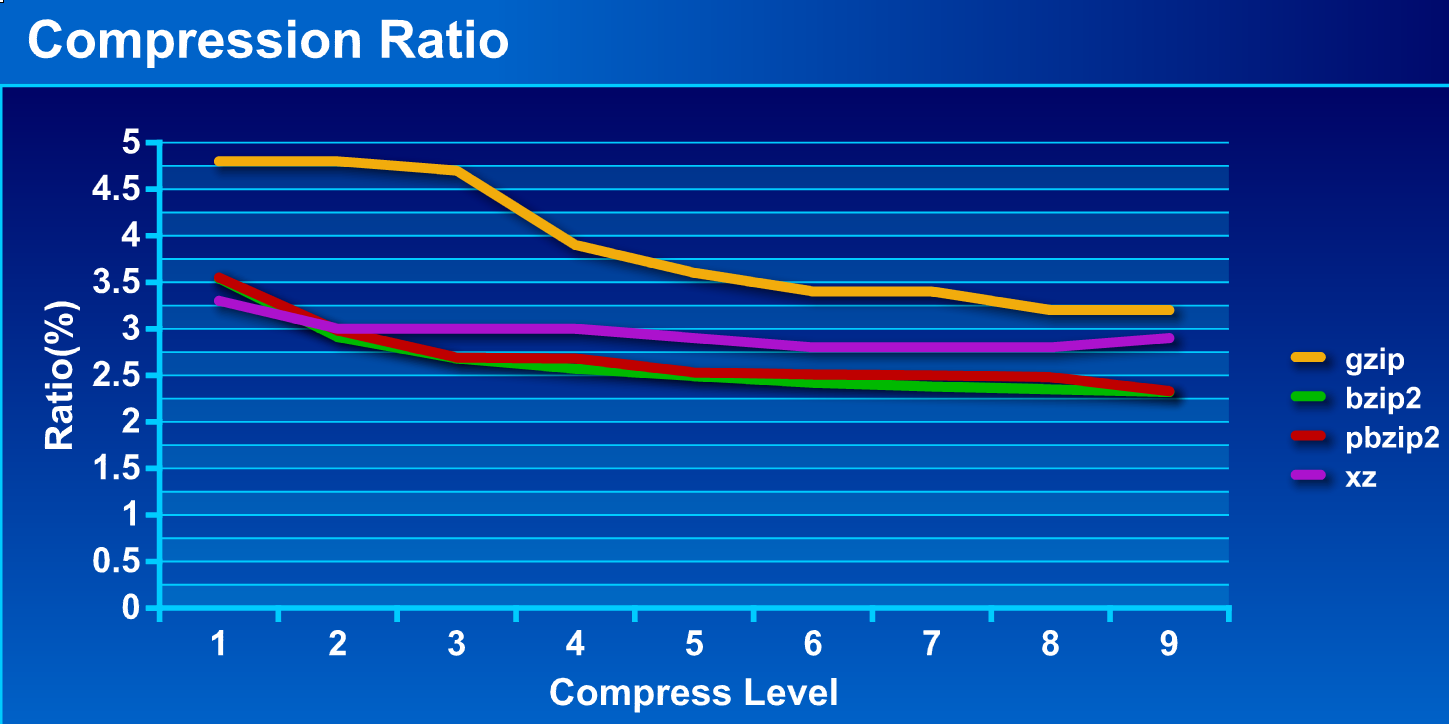

A single table where both output sizes and compression times differ is not a valid way to compare compressors, except in cases that show a Pareto improvement (i.e. both smaller and faster at the same time).

For most algorithms the compression ratio is variable, so they can be as fast or as slow as you tell them, which makes it too easy to create inconclusive or outright misleading results. The `-1` to `-9` flags are not equivalent across tools, so even running them all with the same command-line flag is not fair. When you have two variables (speed and compression) and you don't equalize one of them, the results are not comparable. Valid comparisons are "smallest file given the same time" and "fastest to compress to a specified size". If you compare two cases where one gave a larger file but the other finished faster, the comparison is inconclusive. The proper way to compare compression algorithms is to graph curves created by the (time to compress, output size) points of all their speed settings. Such a graph can then be used to answer questions such as "of the slow compressors, which one gives the smallest file?", "is compressor A better than compressor B for all of their speed settings?" or "which one is the fastest to compress better than a given ratio?".

BTW: this is orthogonal to choosing what data to compress in the benchmark.
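The benchmarking advice in the previous paragraphs (collect (time, size) points for every speed setting, and only treat a Pareto improvement as a clear win) can be sketched as follows. `measure_xz` collects one point per xz preset using Python's `lzma`; points for other tools would have to be measured separately, for example by timing their command-line runs, and added as `Point` values. The query helpers correspond to the two valid comparisons named in the text. All names are hypothetical, and a single timing per preset is noisy; a real benchmark would repeat runs on representative data.

```python
import lzma
import time
from typing import NamedTuple, Optional


class Point(NamedTuple):
    label: str      # e.g. "xz -6"
    seconds: float  # wall-clock time to compress
    size: int       # compressed size in bytes


def measure_xz(data: bytes, presets=range(10)) -> list[Point]:
    """One (time, size) point per xz preset: the curve to plot for xz."""
    points = []
    for p in presets:
        start = time.perf_counter()
        out = lzma.compress(data, preset=p)
        points.append(Point(f"xz -{p}", time.perf_counter() - start, len(out)))
    return points


def dominates(a: Point, b: Point) -> bool:
    """True if a is a Pareto improvement over b: no slower, no larger, better in one."""
    return (a.seconds <= b.seconds and a.size <= b.size
            and (a.seconds < b.seconds or a.size < b.size))


def pareto_front(points: list[Point]) -> list[Point]:
    """Points no other point dominates, ordered from fastest to slowest."""
    front = [p for p in points if not any(dominates(q, p) for q in points)]
    return sorted(front, key=lambda p: p.seconds)


def fastest_to_size(points: list[Point], max_size: int) -> Optional[Point]:
    """'Fastest to compress to a specified size'; None if nothing gets there."""
    ok = [p for p in points if p.size <= max_size]
    return min(ok, key=lambda p: p.seconds, default=None)


def smallest_within(points: list[Point], max_seconds: float) -> Optional[Point]:
    """'Smallest file given the same time'; None if nothing fits the budget."""
    ok = [p for p in points if p.seconds <= max_seconds]
    return min(ok, key=lambda p: p.size, default=None)
```

A target ratio r turns into `fastest_to_size(points, int(r * len(data)))`, and a compressor is better than another "for all of its speed settings" when every point of the second one is dominated by some point of the first.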
